The artificial intelligence (AI) fire that was ignited in 2023 rages on in the new year. Meta has announced the purchase of 350,000 Nvidia H100 graphics processing units (GPUs), currently the world’s most advanced. By the end of 2024 Meta will hold ~14% of all H100s in existence, spending an estimated US$10.5b in 2024 alone.

For perspective, this is a Boeing-sized order, and certainly one of the largest single orders ever in the world of semiconductors.
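The figures quoted above imply two further numbers that are worth making explicit. The following is a rough sketch only: it assumes the full US$10.5b estimate is spread evenly across all 350,000 units and that the ~14% share refers to the same 350,000 units, neither of which is confirmed here. The function and variable names are illustrative.

```python
# Back-of-envelope figures implied by the numbers quoted in the article.
# Assumption: the whole estimated spend maps onto the whole order.
GPUS_ORDERED = 350_000        # H100s in Meta's announced purchase
SPEND_USD = 10.5e9            # estimated 2024 spend
SHARE_OF_ALL_H100S = 0.14     # Meta's approximate share by end of 2024


def implied_unit_price(spend_usd: float, units: int) -> float:
    """Average price per GPU if the spend covers exactly these units."""
    return spend_usd / units


def implied_total_supply(units: int, share: float) -> float:
    """Total units in existence if `units` represents `share` of them."""
    return units / share


unit_price = implied_unit_price(SPEND_USD, GPUS_ORDERED)
total_h100s = implied_total_supply(GPUS_ORDERED, SHARE_OF_ALL_H100S)

print(f"Implied price per H100: US${unit_price:,.0f}")
print(f"Implied H100s in existence: {total_h100s:,.0f}")
```

Under those assumptions the order works out to roughly US$30,000 per GPU and implies about 2.5 million H100s in existence by the end of 2024.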

Meta datacentres: the future home to US$ billions of GPUs

Source: Meta

Data centres have been ground zero for AI, hosting both the heightened levels of model training and emerging inference. The need for further data centre computation will grow meaningfully in 2024. However, unlike 2023, we believe data centres will not be the only area to undergo an AI-powered step change.

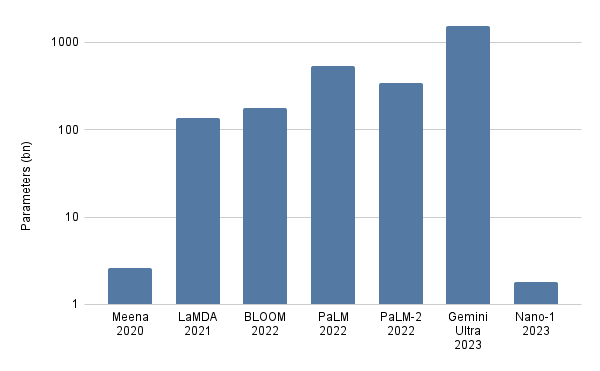

It appears that the next phase for AI will come not from colossal large language models (LLMs) like OpenAI’s GPT-4, reportedly 1 trillion+ parameters, or Alphabet’s Gemini Ultra, but from cut-down versions that are more than 99% smaller. These models are small enough to be deployed “at the edge” – meaning on consumer devices like phones or laptops.

Interestingly, large models have shown diminishing returns to scale for several years, forcing developers to scale parameter counts exponentially for incremental performance gains. The result was that during 2023, LLM development moved definitively toward minimising model size. We see that with Alphabet’s Gemini Nano, Microsoft’s Phi and others.

A selection of Alphabet LLMs over time

Source: Alphabet, Y-axis is logarithmic

Parallel to these architectural developments, semiconductor designers have had the breathing room to significantly beef up on-device memory. This addition will meaningfully differentiate high-end edge semiconductors from low-end competitors, because the memory available on a device limits the size of model it can run.

This is a superb outcome for companies such as Qualcomm, which offers a mobile phone chip tailor-made to utilise these cut-down AI models. The company is also set to debut its first PC offering in the middle of the year, and phone and PC sales are recovering from cyclical weakness. Other consumer electronics-facing semiconductor designers, like Nvidia and Advanced Micro Devices, are also well positioned. All of these chips will continue to need at-scale fabrication, much of which will be handled by Taiwan Semiconductor Manufacturing.
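To make the earlier point about memory limiting model size concrete, here is a minimal sketch of the arithmetic. It counts only the memory needed to hold a model’s weights and ignores everything else a device needs at run time. The precisions (16-bit and 4-bit weights) and the 8 GB device budget are illustrative assumptions, not figures from any vendor, and the function names are hypothetical.

```python
# Rough weight-memory arithmetic for on-device models.
# Assumption: memory = parameters x bits per parameter, weights only.
BYTES_PER_GB = 1e9


def weights_gb(params: float, bits_per_param: int) -> float:
    """Memory in GB needed to store the weights at a given precision."""
    return params * bits_per_param / 8 / BYTES_PER_GB


def max_params(memory_gb: float, bits_per_param: int) -> float:
    """Largest parameter count whose weights fit in `memory_gb`."""
    return memory_gb * BYTES_PER_GB * 8 / bits_per_param


large_model = 1e12                 # a 1 trillion parameter model
edge_model = large_model * 0.01    # a model 99% smaller

print(f"1T params at 16-bit:  {weights_gb(large_model, 16):,.0f} GB")
print(f"10B params at 16-bit: {weights_gb(edge_model, 16):,.0f} GB")
print(f"10B params at 4-bit:  {weights_gb(edge_model, 4):,.0f} GB")

# Hypothetical device with 8 GB available for model weights.
print(f"Max params in 8 GB at 4-bit: {max_params(8, 4):,.0f}")
```

On these assumptions, even a model 99% smaller than a trillion-parameter LLM needs several gigabytes for its weights alone, which is why added on-device memory translates directly into the size of model a chip can host.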

Why does this matter?

The practical or business implications of significant changes to the powerful tools that are increasing the pace of disruption aren’t always obvious at first. In the lead-up to 3G mobile telephony, few predicted that video calls over FaceTime and WhatsApp would quickly be followed by expanded Netflix viewing and the growth of car services like Uber, but all of these services and thousands more evolved because of the expanded carrying capacity of mobile technology.

In all probability, the equivalent AI product that will disrupt a seemingly unrelated industry already exists, though it may not be easily identified – yet. The company behind it is probably also subscale and indistinguishable from broader AI excitement. Although some beneficiaries are visible (more on this later), we believe that playing AI through the existing bottlenecks remains a favourable risk-adjusted strategy.

In the main, AI is likely to benefit most and disrupt many. And we need to be careful about the hype. AI is increasingly being presented as a feature within every piece of hardware and software. A new term has arisen: FICTA – failed in crypto, trying AI. Nobody wants to be left behind, and every company will throw its hat into the ring regardless of feasibility. We saw first-hand that this was the name of the game this year at the Consumer Electronics Show (CES) in Las Vegas: AI-“enabled” companies jostling to showcase their exposure to the megatrend.

Warning. Avoid the hype

We believe it is too early to know the definitive beneficiaries, especially when there exist business models which already benefit from growth in aggregate AI usage and can also augment themselves with AI functionality.

CrowdStrike is an example of this. AI is a flywheel for the cloud. More AI requires more model training and inference – both of which nearly always occur in the cloud. Companies with growing interest in these products will need to increase their cloud usage. As the overall cloud presence grows, the number of targets for cyberattacks increases. Generative AI also allows human-like text to be produced at scale, making phishing and spam more convincing.

Demand for cybersecurity is set to rise simply due to the heightened cyber threat posed by generative AI. However, CrowdStrike also incorporates AI into its service, using a predictive model to address likely avenues of attack and an LLM to interface with clients (cybersecurity clients often operate the services they use with little to no prior expertise). This gives the company two AI-based ramps without its having had to build a new product from scratch over the last twelve to eighteen months.

GitLab is a similar opportunity, in its case relying on code-generating AI to drive an increased volume of software development. LLMs have an outsized aptitude for code generation. We believe this will put downward pressure on the cost of software development and increase the overall amount of software produced.

More software requires more storage, deployment and maintenance (collectively known as DevOps) for code. GitLab is a DevOps provider, operating the world’s second-largest code repository. DevOps itself can be overlaid with AI functionality such as automated code review management and assisted code integration.

Even though these companies may ultimately underperform the most successful AI application (assuming it can be found), we believe that funds management is an exercise in valuation. Our strategy saw us through a bleak 2022 and a bumper 2023. Now, as we approach a (seemingly) more stable 2024, we are confident that investing in disruption and adhering to the valuation process will stand us in good stead.
